So, I am happily adding NFS storage to an ESXi host. Then, suddenly, I cannot add any more NFS mounts! What is VMware doing? A little investigation turns up a default limit of eight NFS mounts. This is easy to increase using the steps described below. Note that if you increase NFS.MaxVolumes, you should also consider raising Net.TcpipHeapSize, which should be set to approximately 1MB per NFS mount.

- Open Advanced Settings:

  - Log in to the vSphere client

  - Highlight the ESXi host you want to edit

  - Go to the Configuration tab

  - Click the “Advanced Settings” link, which is the bottom-most link

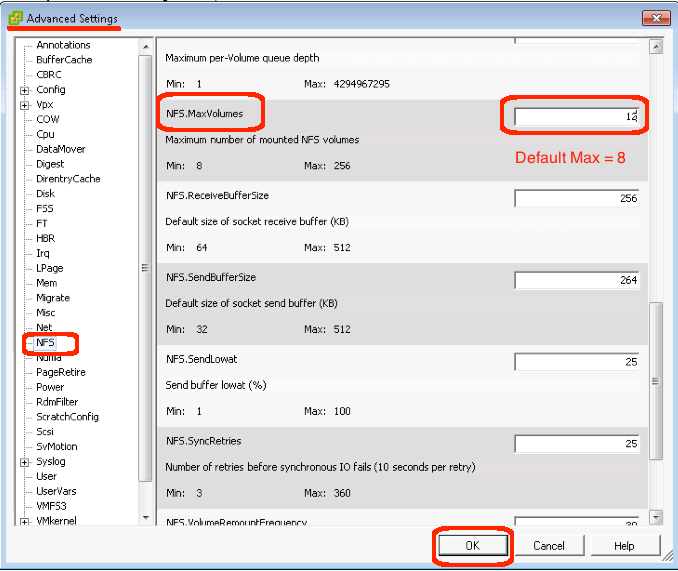

- Change the NFS.MaxVolumes setting:

  - In the “Advanced Settings” dialog box…

  - Click NFS from the left-hand tree

  - Scroll down to NFS.MaxVolumes (note: the parameters are listed in alphabetical order)

  - Enter a valid value between 8 and 256

  - Click OK to save your changes

- Consider editing Net.TcpipHeapSize:

  - Back in the “Advanced Settings” dialog box…

  - Click Net from the left-hand tree

  - Scroll to Net.TcpipHeapSize

  - Calculate 1MB per NFS mount (e.g. 64MB if you set NFS.MaxVolumes to 64)

  - Enter a valid value and click OK to save your changes
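
If you have many hosts to change, the arithmetic in the steps above can be scripted. What follows is a minimal sketch, not a tested procedure. The helper names `plan_nfs_settings` and `esxcli_commands` are my own inventions for illustration. The printed commands assume an ESXi release that ships the `esxcli system settings advanced` namespace, and the range Net.TcpipHeapSize accepts depends on the release, so you may need to cap the computed value.

```python
# Sketch only: derives the two advanced-setting values discussed above and
# prints esxcli commands that could apply them from the ESXi shell.
# The function names are hypothetical; the command syntax assumes a release
# that provides "esxcli system settings advanced".

MIN_VOLUMES = 8    # default limit mentioned in the post
MAX_VOLUMES = 256  # upper bound mentioned in the post
MB_PER_MOUNT = 1   # rule of thumb from the post: about 1MB of heap per mount


def plan_nfs_settings(mounts):
    """Return (max_volumes, heap_mb) for the desired number of NFS mounts."""
    if not MIN_VOLUMES <= mounts <= MAX_VOLUMES:
        raise ValueError(
            f"mounts must be between {MIN_VOLUMES} and {MAX_VOLUMES}"
        )
    # The heap value is not clamped here; check what your release accepts.
    return mounts, mounts * MB_PER_MOUNT


def esxcli_commands(mounts):
    """Build the command lines that would set both values."""
    max_volumes, heap_mb = plan_nfs_settings(mounts)
    return [
        f"esxcli system settings advanced set -o /NFS/MaxVolumes -i {max_volumes}",
        f"esxcli system settings advanced set -o /Net/TcpipHeapSize -i {heap_mb}",
    ]


if __name__ == "__main__":
    for command in esxcli_commands(64):
        print(command)
```

The script only prints the commands rather than running them, so you can review the values before touching a host.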

I have noticed that most implementations were previously iSCSI or Fibre Channel. Now, many customers select NFS over Ethernet because (a) Ethernet network cards and cabling are less expensive, (b) they are already familiar with Ethernet switches and routers, and (c) NFS mounts are simpler to define and use. As this happens, more administrators are going to hit this VMware default and need to alter it for their implementations.